1. Early life and education

Timnit Gebru's early life and academic pursuits were significantly shaped by her experiences with discrimination and a growing interest in the ethical dimensions of technology.

1.1. Birth and background

Gebru was born on May 13, 1983, and raised in Addis Ababa, Ethiopia. Both of her parents are Eritrean. Her father, an electrical engineer with a PhD, died when she was five years old. She was then raised by her mother, an economist, alongside her two older sisters, who also became electrical engineers.

1.2. Immigration and experiences in the US

At the age of 15, during the Eritrean-Ethiopian War, Gebru was forced to flee Ethiopia after some of her family members were deported to Eritrea and compelled to fight in the conflict. She initially faced difficulties obtaining a United States visa and briefly resided in Ireland before eventually being granted political asylum in the U.S. She described this period as "miserable."

Upon settling in Somerville, Massachusetts, to attend high school, Gebru immediately encountered racial discrimination. Despite being a high-achieving student, some teachers refused to allow her to enroll in certain Advanced Placement courses. A pivotal moment that steered her towards technology ethics occurred after she completed high school. When she called the police to report an assault on a Black female friend in a bar, her friend, rather than the assailant, was arrested and remanded to a cell. Gebru characterized this incident as a "blatant example of systemic racism," and it profoundly influenced her perspective on the systemic biases embedded within society and, by extension, technology. In 2008, she canvassed in support of Barack Obama's presidential campaign.

1.3. Academic and early research career

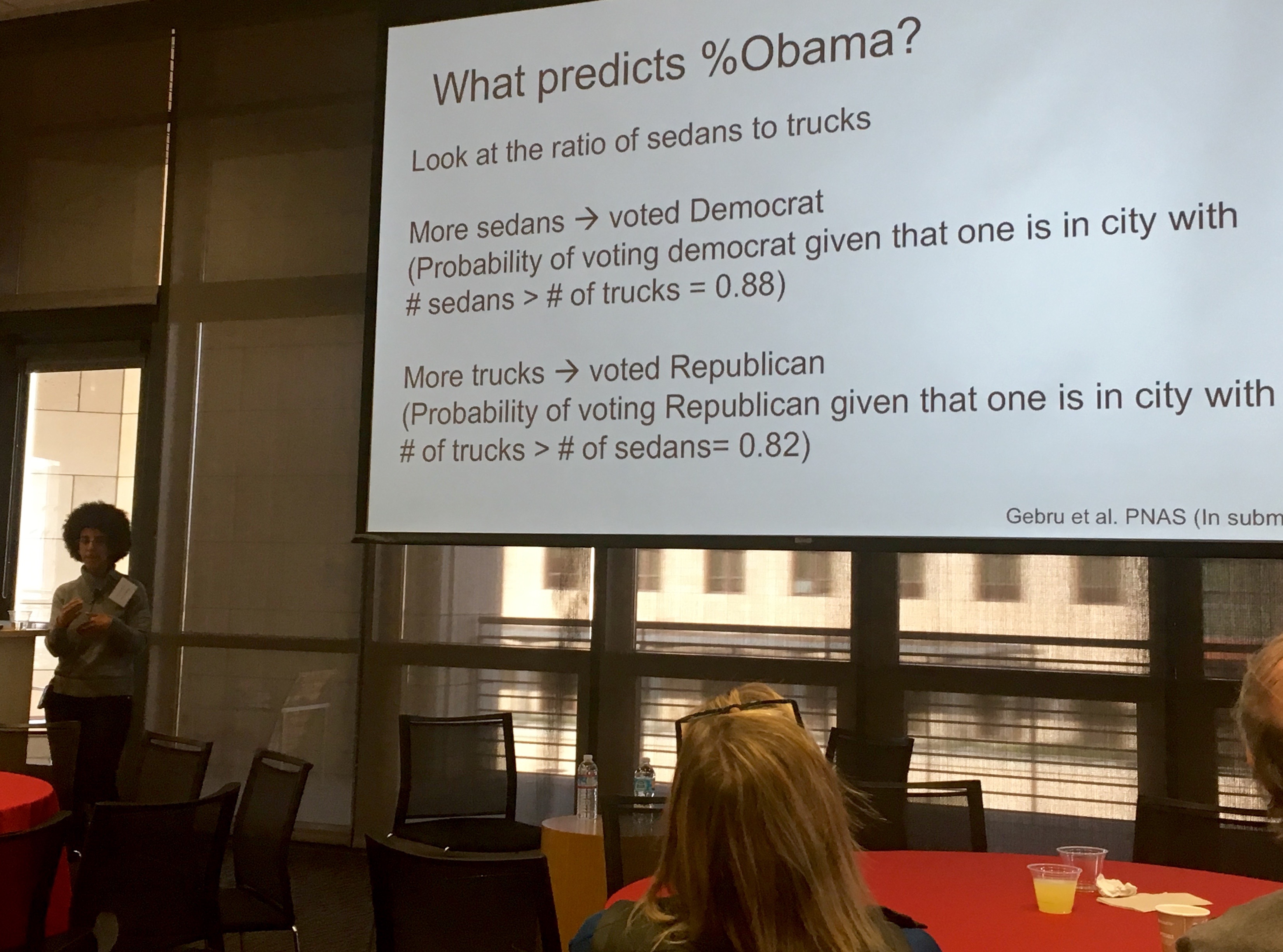

In 2001, Gebru was accepted into Stanford University, where she earned both her Bachelor of Science and Master of Science degrees in electrical engineering. She later completed her PhD in computer vision in 2017 under the supervision of Fei-Fei Li. Her doctoral research involved the data mining of publicly available images. She was particularly interested in finding alternatives to the costly methods governmental and non-governmental organizations used to collect community information. To this end, Gebru combined deep learning techniques with Google Street View data to estimate the demographics of neighborhoods across the United States. Her research demonstrated that socioeconomic attributes, such as voting patterns, income, race, and education, could be inferred from observations of cars. For instance, her study found that communities with more pickup trucks than sedans were more likely to vote for the Republican party. This work, which analyzed over 15 million images from the 200 most populated U.S. cities, garnered significant media attention from outlets like BBC News, Newsweek, The Economist, and The New York Times.

Gebru presented her doctoral research at the 2017 LDV Capital Vision Summit competition, which she won, leading to a series of collaborations with other entrepreneurs and investors. In 2016, during her PhD program, and again in 2018, she returned to Ethiopia to participate in Jelani Nelson's AddisCoder programming campaign.

While pursuing her PhD, Gebru authored an unpublished paper expressing her concerns about the future of AI. Drawing on her experiences with the police and a ProPublica investigation into predictive policing that exposed the projection of human biases into machine learning, she highlighted the dangers posed by the lack of diversity in the AI field. In this paper, she sharply criticized the "boy's club culture" prevalent in the industry, reflecting on instances of sexual harassment she experienced at conferences and the hero worship of prominent figures in the field.

2. Career

Timnit Gebru's career has been marked by significant contributions to both hardware and software development, culminating in her pivotal role in the field of AI ethics and her subsequent establishment of an independent research institute.

2.1. Early career at Apple and Microsoft

Gebru began her professional journey as an intern at Apple while still at Stanford in 2004, working in their hardware division on circuitry for audio components. The following year, she was offered a full-time position as an audio engineer. Her manager at the time described her as "fearless" and well-liked by her colleagues. During her tenure at Apple, Gebru developed a keen interest in software development, particularly computer vision systems capable of detecting human figures. She went on to develop signal processing algorithms for the first iPad. At the time, she noted that she did not consider the potential for surveillance, finding the technical challenge inherently interesting.

Years after leaving Apple, during the #AppleToo movement in the summer of 2021, Gebru publicly stated that she had experienced "so many egregious things" at the company. She criticized Apple's lack of accountability and the media's role in shielding major tech companies from public scrutiny, asserting that such practices could not continue indefinitely.

In 2013, while pursuing her PhD, Gebru joined Fei-Fei Li's lab at Stanford, where her research focused on data mining publicly available images. This work led to the Google Street View study of U.S. neighborhood demographics described in the account of her academic career above.

In the summer of 2017, Gebru joined Microsoft as a postdoctoral researcher in the Fairness, Accountability, Transparency, and Ethics in AI (FATE) lab. During her time there, she spoke at the Fairness and Transparency conference, where she was interviewed by MIT Technology Review about biases in AI systems and how increased diversity in AI teams could mitigate these issues. In response to a question about how a lack of diversity distorts AI, particularly computer vision, Gebru highlighted the inherent biases of software developers. While at Microsoft, she co-authored the influential research paper Gender Shades with Joy Buolamwini. This paper became the namesake of a broader project at the MIT Media Lab and revealed significant accuracy disparities in commercial facial recognition software, finding that Black women were 35% less likely to be recognized than White men in one particular implementation.

2.2. AI ethics research at Google

Gebru joined Google in 2018, where she co-led the Ethical Artificial Intelligence team alongside Margaret Mitchell. Her work focused on studying the implications of artificial intelligence and enhancing technology's capacity to contribute to social good.

In 2019, Gebru and other AI researchers signed a letter urging Amazon to stop selling its facial recognition technology to law enforcement agencies, citing its demonstrated bias against women and people of color. The call was supported by an MIT study showing that Amazon's facial recognition system had more difficulty identifying darker-skinned women than other technology companies' software did. In an interview with The New York Times, Gebru said she believed that facial recognition technology is currently too dangerous for use in law enforcement and security applications.

2.3. "Stochastic Parrots" paper and departure from Google

In 2020, Gebru co-authored a seminal paper titled "On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? 🦜" with five other researchers, including Emily M. Bender, who was the only co-author not employed by Google at the time. The paper rigorously examined the inherent risks associated with very large language models (LLMs), detailing concerns such as their substantial environmental footprint, considerable financial costs, the inherent inscrutability of large models, their potential to exhibit and perpetuate prejudice against specific demographic groups, their fundamental inability to truly understand the language they process, and the significant risk of LLMs being exploited to spread disinformation.

In December 2020, Gebru's employment with Google ended under controversial circumstances. Google management requested that she either withdraw the "Stochastic Parrots" paper before its publication or remove the names of all Google employees from the author list. According to Jeff Dean, Google's head of AI research, the paper was submitted without adhering to Google's internal review process, and the company asserted that the paper ignored too much relevant research on mitigating the problems it described, particularly regarding environmental impact and bias. In response, Gebru requested the identities and feedback of every reviewer involved in the decision, along with guidance on how to revise the paper to meet Google's satisfaction. She stated that if this information was not provided, she would discuss "a last date" for her employment with her manager. Google immediately terminated her employment, claiming it was accepting her resignation. Gebru, however, consistently maintained that she had not formally offered to resign but had only threatened to do so.

Following her departure, Jeff Dean publicly released an internal email defending Google's research paper process, stating the company's commitment to tackling ambitious problems responsibly. Gebru and her supporters contended that this initial public statement, coupled with Dean's subsequent silence, fueled a campaign of harassment against her. She was subjected to racist and obscene comments on Twitter from numerous sock puppet accounts and internet trolls. Gebru and her allies alleged that some of this harassment originated from machine learning researcher Pedro Domingos and businessman Michael Lissack, who had publicly dismissed her work as "advocacy disguised as science." Lissack was also accused of harassing Gebru's former colleagues, Margaret Mitchell and Emily M. Bender, leading to the temporary suspension of Lissack's Twitter account in February 2021.

Gebru's assertion that she was fired rather than having resigned garnered widespread support. Nearly 2,700 Google employees and over 4,300 academics and civil society supporters signed a letter condemning her alleged termination. Nine members of the U.S. Congress sent a letter to Google, demanding clarification on the circumstances surrounding her exit. Gebru's former team at Google called for Vice President Megan Kacholia to be removed from their management chain and for both Kacholia and Dean to apologize for Gebru's treatment. It was alleged that Kacholia had terminated Gebru's employment without first notifying Gebru's direct manager, Samy Bengio. Margaret Mitchell, Gebru's former co-lead, publicly criticized Google's handling of employees working to address bias and toxicity in AI. Google subsequently terminated Mitchell's employment, alleging that she had exfiltrated confidential documents and private employee data.

In the wake of the negative publicity, Sundar Pichai, CEO of Alphabet Inc., Google's parent company, issued a public apology on Twitter, though he did not clarify whether Gebru had been terminated or resigned. He initiated a months-long investigation into the incident. Upon its conclusion, Dean announced that Google would alter its "approach for handling how certain employees leave the company" and implement changes to the review process for research papers on "sensitive" topics. Additionally, diversity, equity, and inclusion goals would be reported quarterly to Alphabet's board of directors. Gebru responded on Twitter, stating she "expected nothing more" from Google, and pointed out that the announced changes were precisely what she had advocated for, yet no one was held accountable for her alleged termination. Two Google engineers subsequently resigned from the company.

A forum held by Google to discuss experiences with racism within the company was reportedly spent largely discrediting Gebru, which employees perceived as Google making an example of her for speaking out. This forum was followed by a group psychotherapy session for Google's Black employees with a licensed therapist, which employees found dismissive of the harm they felt Gebru's alleged termination had caused.

In November 2021, the Nathan Cummings Foundation, in partnership with Open MIC and endorsed by Color of Change, filed a shareholder proposal advocating for a "racial equity audit" to analyze Alphabet's "adverse impact" on "Black, Indigenous and People of Color (BIPOC) communities." The proposal also sought an investigation into whether Google retaliated against minority employees who raised concerns about discrimination, explicitly citing Gebru's firing, her previous calls for Google to hire more BIPOC individuals, and her research into racially based biases in Google's technology. This proposal followed a less formal request from a group of Senate Democratic Caucus members, led by Cory Booker, earlier that year, which also referenced Gebru's separation from the company and her work. In December 2021, Reuters reported that Google was under investigation by the California Department of Fair Employment and Housing (DFEH) regarding its treatment of Black women, following numerous formal complaints of discrimination and harassment from current and former workers. Gebru and other BIPOC employees reported that when they brought their experiences with racism and sexism to Human Resources, they were advised to take sick leave and seek therapy through the company's Employee Assistance Program (EAP). Gebru and her supporters believe her alleged dismissal was retaliatory and indicative of institutional racism within Google. Google stated that it "continue[s] to focus on this important work and thoroughly investigate[s] any concerns, to make sure [Google] is representative and equitable."

2.4. Founding of the Distributed Artificial Intelligence Research Institute (DAIR)

In June 2021, Gebru announced her intention to raise funds to establish an independent research institute, modeled on her work with Google's Ethical AI team and her experiences with Black in AI. On December 2, 2021, she officially launched the Distributed Artificial Intelligence Research Institute (DAIR). The institute's core mission is to document the effects of artificial intelligence on marginalized groups, with a particular focus on Africa and African immigrants in the United States. One of DAIR's initial projects involves analyzing satellite imagery of townships in South Africa using AI to gain a deeper understanding of the legacies of apartheid.

2.5. Criticism of TESCREAL and AI futurism

Gebru, in collaboration with Émile P. Torres, coined the acronym TESCREAL to critique a cluster of overlapping futurist philosophies: transhumanism, extropianism, singularitarianism, cosmism, rationalism, effective altruism, and longtermism. She views these philosophies as a right-leaning influence within Big Tech and draws parallels between their proponents and the eugenicists of the 20th century, arguing that they produce harmful projects while portraying them as beneficial to humanity. Gebru has specifically criticized research into artificial general intelligence (AGI), asserting that it is rooted in eugenics. She advocates for a shift in focus away from AGI, deeming its pursuit an inherently unsafe practice.

3. Key research areas and contributions

Timnit Gebru's research has significantly advanced the understanding and mitigation of biases in AI systems, focusing on fairness, the limitations of facial recognition technology, and the ethical implications of large language models.

3.1. Algorithmic bias and fairness

Gebru's work extensively identifies and addresses biases inherent in AI systems. Her research explores methodologies for promoting fairness and equity in algorithmic design and deployment. She emphasizes that biases in AI often stem from biases present in the training data and the developers themselves, leading to discriminatory outcomes, particularly for underrepresented groups. Her contributions include developing frameworks and tools to audit AI systems for bias and advocating for diverse development teams to prevent such issues.

3.2. Facial recognition technology and gender/racial disparities

A cornerstone of Gebru's research is her "Gender Shades" project, conducted in collaboration with Joy Buolamwini. This groundbreaking study meticulously highlighted significant accuracy disparities in commercial facial recognition systems based on gender and race. It revealed that these systems performed significantly worse when identifying darker-skinned females, sometimes failing to recognize them at a rate 35% higher than white males. This research provided empirical evidence of algorithmic bias in widely used technologies and underscored the critical need for more equitable and robust AI development. Based on these findings, Gebru has been a vocal proponent against the use of facial recognition technology by law enforcement and for security purposes, arguing that its current biases make it too dangerous for such applications.

3.3. Risks of Large Language Models (LLMs)

Gebru's influential "Stochastic Parrots" paper, co-authored with Emily M. Bender and others, brought to light several critical risks associated with the development and deployment of large language models (LLMs). The paper detailed concerns regarding the substantial environmental costs associated with training these massive models, their significant financial costs, and their inherent inscrutability, which makes it difficult to understand how they arrive at their outputs. Furthermore, the research highlighted the potential for LLMs to perpetuate and amplify existing societal biases, their fundamental inability to genuinely comprehend the language they process, and the risk of their misuse for generating and spreading disinformation. This paper sparked a major controversy at Google, leading to Gebru's departure, but it also catalyzed a broader discussion within the AI community about responsible LLM development.

3.4. AI ethics and societal impact

Beyond specific technical biases, Gebru's work broadly examines the ethical and societal consequences of AI technologies. She consistently highlights the disproportionate impact of these technologies on marginalized communities, advocating for a more human-centered approach to AI development. Her research and advocacy propose concrete solutions for fostering responsible AI, including greater transparency, accountability, and the active involvement of diverse stakeholders in the design and governance of AI systems. She stresses the importance of considering the social and political contexts in which AI is deployed and pushing for technologies that genuinely serve the public good rather than exacerbating existing inequalities.

5. Awards and recognition

Timnit Gebru has received numerous prestigious awards and recognitions for her groundbreaking work in AI ethics, fairness, and her dedicated advocacy.

- **2019 AI Innovations Award:** Gebru, along with Joy Buolamwini and Inioluwa Deborah Raji, received VentureBeat's AI Innovations Award in the "AI for Good" category. This award recognized their research highlighting the problem of algorithmic bias in facial recognition technology.

- **2021 World's 50 Greatest Leaders:** Fortune magazine named Gebru one of the world's 50 greatest leaders in 2021, acknowledging her influence and impact on the global stage.

- **2021 Nature's 10:** The scientific journal Nature included Gebru in its list of ten scientists who played crucial roles in shaping scientific developments in 2021.

- **2022 Time's 100 Most Influential People:** Time magazine recognized Gebru as one of the 100 most influential people of 2022, further cementing her status as a leading figure in technology and ethics.

- **2023 Great Immigrants Awards:** The Carnegie Corporation of New York honored Gebru with a Great Immigrants Award in 2023, specifically recognizing her significant contributions to the field of ethical artificial intelligence.

- **2023 BBC 100 Women:** In November 2023, she was named to the BBC's 100 Women list, which features 100 inspiring and influential women from around the world.

6. Impact and legacy

Timnit Gebru's impact on the field of artificial intelligence and its broader societal implications is profound and enduring. Her work has fundamentally reshaped the discourse around AI ethics, pushing for greater accountability, transparency, and equity in the development and deployment of these powerful technologies. Her research, particularly on algorithmic bias in facial recognition technology and the risks of large language models, has provided critical empirical evidence that has forced the industry to confront its shortcomings and biases.

Her contentious departure from Google became a watershed moment, exposing deep-seated issues within large tech corporations regarding academic freedom, diversity, and the handling of internal dissent, especially from marginalized employees. This event galvanized widespread support from thousands of academics, civil society members, and even members of the U.S. Congress, leading to increased scrutiny of corporate practices and calls for greater oversight.

The establishment of the Distributed Artificial Intelligence Research Institute (DAIR) is a direct testament to her commitment to independent, community-focused AI research. DAIR's mission to document AI's impact on marginalized groups, with a specific focus on Africa and African immigrants, exemplifies her dedication to ensuring that AI development is equitable and beneficial for all, rather than perpetuating existing inequalities. Her co-founding of Black in AI has created a vital community and advocacy platform, significantly increasing the visibility and well-being of Black researchers in a field historically lacking diversity.

Gebru's critical analysis of AI futurism and her coining of the TESCREAL acronym have challenged the dominant narratives in AI, urging a shift away from speculative and potentially harmful pursuits like artificial general intelligence towards addressing the tangible, immediate ethical concerns of AI's real-world applications. Her legacy is one of unwavering advocacy for human rights and social justice in technology, empowering marginalized communities, and persistently demanding that AI be developed with integrity and a deep understanding of its societal consequences.

7. Selected publications

- Gebru, Timnit (August 1, 2017). Visual computational sociology: computer vision methods and challenges (PhD thesis). Stanford University.

- Gebru, Timnit; Krause, Jonathan; Wang, Yilun; Chen, Duyun; Deng, Jia; Aiden, Erez Lieberman; Fei-Fei, Li (December 12, 2017). "Using deep learning and Google Street View to estimate the demographic makeup of neighborhoods across the United States". Proceedings of the National Academy of Sciences. 114 (50): 13108-13113.

- Buolamwini, Joy; Gebru, Timnit (2018). "Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification". Proceedings of Machine Learning Research. 81: 1-15.

- Gebru, Timnit (July 9, 2020). "Race and Gender". In Dubber, Markus D.; Pasquale, Frank; Das, Sunit (eds.). The Oxford Handbook of Ethics of AI. Oxford University Press. pp. 251-269.

- Gebru, Timnit; Torres, Émile P. (April 1, 2024). "The TESCREAL bundle: Eugenics and the promise of utopia through artificial general intelligence". First Monday. 29 (4).

- Gebru, Timnit; Bender, Emily M.; et al. (2020). "On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? 🦜". (This paper was central to the controversy surrounding Gebru's departure from Google.)